October 4, 2023

From UnderwriteMe’s laptop to yours: How underwriting automation software gets built

At UnderwriteMe, we pride ourselves on the reliability of our services. It’s not just a matter of pride; it’s also a contractual obligation. Making our services available, whether as the Protection Platform or the Decision Platform, requires the talents of many people across the engineering teams.

Everyone knows that developers write code. That code gets turned into an application. But how does the application that a developer built get shipped into something that runs on a server for users?

Ten years ago, when UnderwriteMe first started, the Protection Platform and Underwriting Engine were parts of the same big application, known as a monolith. This might sound simple, but it means that if you change one part, you also deploy a new version of the other parts, which can have unintended side effects. Since then we’ve split multiple components out of the monolith, and our application base is now composed of many microservices. Having many smaller services running and talking to each other allows us to make targeted changes faster and with less risk, but it also introduces new complexities when deploying the applications to servers.

When the Internet first took off, applications were installed on servers manually. The operations team would connect to a server, then install and launch the application. This process wasn’t dissimilar to the way software is installed on a laptop. By the time UnderwriteMe started, this process was becoming automated, which is a big help when you’re deploying dozens of services. Changes to the servers that run applications could be made in the same way that developers make changes to application code. And just like changes to application code, these can be peer reviewed and tested to reduce the potential for issues.

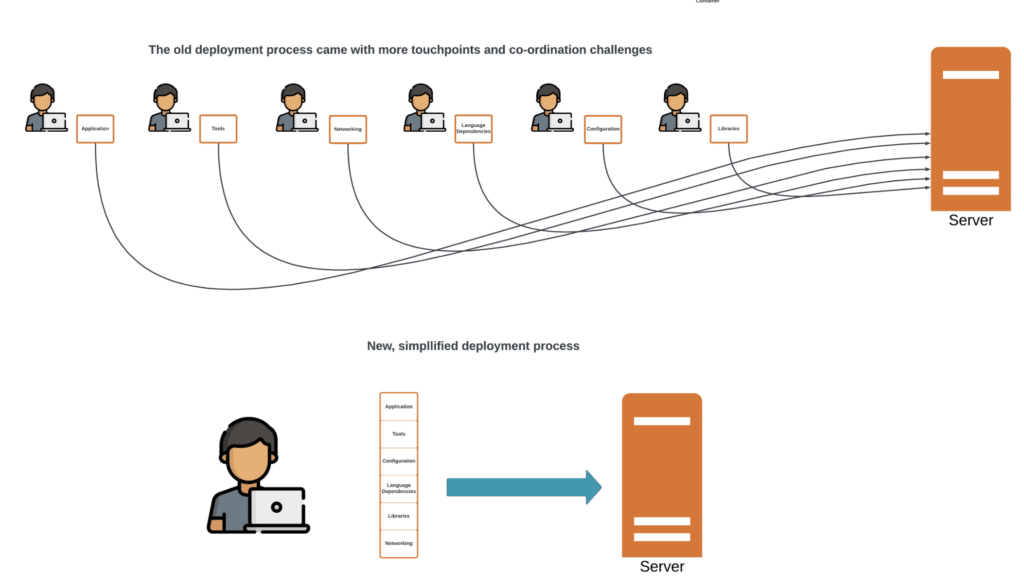

This massively improved the reliability of services on the internet, not just at UnderwriteMe, but the process was still fairly complex. Running a service is not just a matter of installing the application. The operations team would typically have to manage the server’s operating system (OS) and make sure that every application dependency is installed correctly: tools available on the server, libraries (collections of reusable code), configuration, permissions, and more. This gave rise to the “it works on my PC” phenomenon. A developer might have written accurate, well-tested code that works when tested locally or even in a non-production environment, but when it is deployed to production, users experience problems.
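To make the “it works on my PC” problem concrete, here is a minimal sketch that compares the dependencies on a developer’s laptop with those on a production server and reports any mismatch. The package names and versions are made up for illustration, and `find_drift` is a hypothetical helper rather than a tool we actually use.

```python
from __future__ import annotations


def find_drift(
    expected: dict[str, str], actual: dict[str, str]
) -> dict[str, tuple[str | None, str | None]]:
    """Return every dependency whose version differs between two environments.

    A value of None means the dependency is missing on that side.
    """
    drift = {}
    for name in sorted(expected.keys() | actual.keys()):
        want = expected.get(name)
        have = actual.get(name)
        if want != have:
            drift[name] = (want, have)
    return drift


# Hypothetical environments: these names and versions are illustrative only.
developer_laptop = {"openssl": "3.0.8", "libxml2": "2.10.3", "java": "17"}
production_server = {"openssl": "1.1.1", "libxml2": "2.10.3"}

drift = find_drift(developer_laptop, production_server)
for name, (want, have) in drift.items():
    print(f"{name}: laptop has {want}, server has {have}")
```

Any entry in `drift` is a difference that could make code behave one way for the developer and another way for users.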

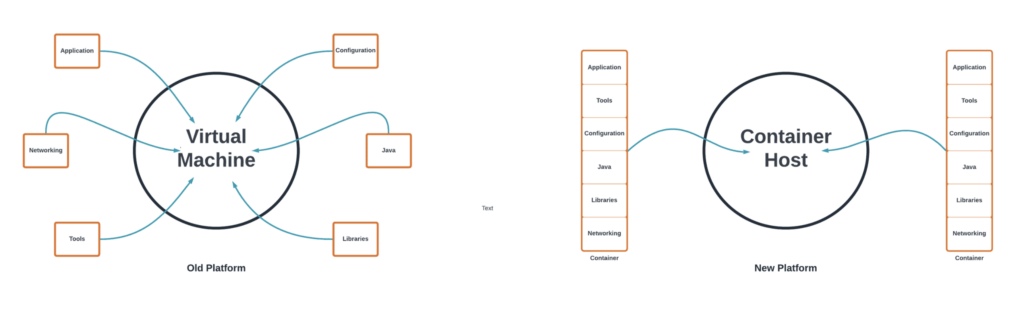

Enter containerisation. UnderwriteMe has always used cloud infrastructure services. Cloud providers take big servers and subdivide them into smaller servers known as virtual machines (VMs), which they make available for rent over short or long timeframes. If you logged on to a VM, you’d think it was a stand-alone physical machine, but it’s not. Once the cloud provider creates the VM, we can start it up by loading a disk image, which is just a backup of an empty server. From there, the application and its dependencies are installed and the service is available to clients.

We regularly upgrade clients to newer versions of our applications, but migrating to the new platform is much more complex than a typical upgrade. The databases have to migrate along with the applications. We collect, store and transmit far too much data to keep it in regular files, so instead we use a database, which organises the data into a set of spreadsheet-like tables. You can’t move the database itself from the old platform to the new platform, so when we migrate, we also have to copy and validate the data in the databases. We follow a comprehensive playbook to make sure that no customer data is accidentally changed or lost in the migration. This is often a complex and time-consuming process, so it’s usually done overnight.
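The copy-and-validate step can be sketched in a few lines. This is an illustration only, not our actual playbook: it uses SQLite in-memory databases as stand-ins for the old and new platforms, and the `decisions` table, its columns and the helper functions are all hypothetical. The idea is to copy the rows, then compare a row count and a checksum on both sides before switching over.

```python
import hashlib
import sqlite3

# Hypothetical table used only for this example.
SCHEMA = "CREATE TABLE decisions (id INTEGER PRIMARY KEY, applicant TEXT, decision TEXT)"


def table_fingerprint(conn, table):
    """Return (row_count, checksum) for a table, reading rows in id order."""
    digest = hashlib.sha256()
    count = 0
    # The table name comes from our own fixed list, never from user input.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY id"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def copy_table(source, target, table):
    """Copy every row of the table from the source database to the target."""
    rows = source.execute(
        f"SELECT id, applicant, decision FROM {table} ORDER BY id"
    ).fetchall()
    target.executemany(
        f"INSERT INTO {table} (id, applicant, decision) VALUES (?, ?, ?)", rows
    )
    target.commit()


old_db = sqlite3.connect(":memory:")  # stand-in for the old platform
new_db = sqlite3.connect(":memory:")  # stand-in for the new platform
old_db.execute(SCHEMA)
new_db.execute(SCHEMA)
old_db.executemany(
    "INSERT INTO decisions VALUES (?, ?, ?)",
    [(1, "Applicant A", "accept"), (2, "Applicant B", "refer")],
)
old_db.commit()

copy_table(old_db, new_db, "decisions")
migration_ok = table_fingerprint(old_db, "decisions") == table_fingerprint(new_db, "decisions")
print("migration verified" if migration_ok else "mismatch: do not switch over")
```

A matching count and checksum give confidence that nothing was lost or altered in transit. A real migration would run checks like this per table and on far larger volumes, which is part of why it tends to be an overnight job.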

Containerisation adds a layer of abstraction on top of this. The application is packaged up with its own operating system and all dependencies. This can be thought of as analogous to a shipping container, which makes loading and unloading physical goods at ports faster, easier and standardised.

Instead of working with the host VM’s OS, tools, or libraries, the application uses the ones that are packaged up with it. As far as the host VM is concerned, it’s just running a plain container process. This means that when developers run the application locally, where they can test changes or find bugs, the application runs in the same environment as it does in production.

The benefits of containerisation aren’t limited to improved application reliability. Deploying an application in the VM world meant managing the host OS, deploying the application and its configuration, and aligning all of that with network configuration (such as firewall rules) and security processes. These were all handled by different systems, and sometimes different teams. By simplifying and aligning the release process we can remove scope for errors and deliver consistently safe, repeatable upgrades. Because everything about the deployments is under the management of the development team, the people closest to the application are able to make sure that we’re deploying in the best way possible.

In addition to containers, the new platform uses orchestration tools, which further automate the deployment and management of applications by handling the services surrounding a container. If an instance becomes slow, a new one will be brought up. If traffic increases, the orchestrator will provision additional instances. This all happens automatically and allows us to resolve issues before they impact users. The new approach also comes with improved monitoring and logging infrastructure, which allows our support and product teams to see more clearly how our applications are performing and to resolve issues faster.
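The decision an orchestrator makes can be sketched as a small function. This is a simplified illustration, not how any particular orchestration tool is implemented: the latency threshold, the capacity per instance, the minimum instance count and the instance names are all made-up assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    latency_ms: float  # how long this instance currently takes to respond


def reconcile(
    instances,
    requests_per_second,
    *,
    max_latency_ms=500.0,         # assumed threshold for "too slow"
    capacity_per_instance=100.0,  # assumed requests per second one instance can serve
    min_instances=2,              # assumed floor so there is always a spare
):
    """Return (instances to replace, total instances wanted, new instances to start)."""
    # Any instance slower than the threshold is marked for replacement.
    to_replace = [i.name for i in instances if i.latency_ms > max_latency_ms]
    # Work out how many instances the current traffic calls for.
    needed_for_traffic = math.ceil(requests_per_second / capacity_per_instance)
    desired = max(min_instances, needed_for_traffic)
    # Start enough new instances to cover the gap left by slow or missing ones.
    healthy = len(instances) - len(to_replace)
    to_start = max(0, desired - healthy)
    return to_replace, desired, to_start


# Hypothetical snapshot: one healthy instance, one slow instance, rising traffic.
running = [Instance("engine-1", 120.0), Instance("engine-2", 900.0)]
plan = reconcile(running, requests_per_second=350)
print(plan)
```

An orchestrator runs a check like this continuously, so a slow instance is replaced and extra capacity is added without anyone having to step in.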

These changes reflect years of work from developers and non-developers and will help keep our services as healthy and reliable as possible for our customers as we expand into the next phase of our growth – and keep downtime well below the contractual limit.